There was a time when x86 hardware systems, and the applications and operating systems installed on them, were considered good, but not 'bet your business' great. Reliability was less than ideal. Early deployments involved smaller numbers of servers, and each and every server counted. The applications themselves were not decomposed well enough to share transaction processing across machines, so the failure of any single server impacted production. Candidly, I am not sure whether the hardware or the software was mostly at fault, or a combination of both, but server failures were a very real concern. High Availability or "HA" configurations were considered standard operating procedure for most applications.

The server vendors responded by upping their game, designing much more robust server platforms with higher quality components, connectors and designs. The operating system vendors rose to the challenge by segmenting their product lines into industrial-strength 'server' distributions and by running 'certified platform' hardware compatibility programs. This made a huge difference, and TODAY, modern servers rarely fail. They run, they run hard, and they are regarded as rock solid when provisioned properly.

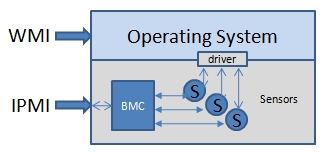

Why the history? Because in those early days, the less than favorable reliability of servers required some form of auxiliary bare metal 'out of band' access to correct operational failures at the hardware level. Technologies such as the Intel-led IPMI standard and HP's iLO became a routine part of the discussion when building data center solutions with remote remediation capabilities. This access was provided by a small additional processor called a Baseboard Management Controller (BMC), which runs its own firmware independently of the host, needs no host operating system and only standby power, and communicates sensor and status data to the outside world. The ability to reboot a server in the middle of the night over the internet from the sysadmin's house was all the rage. Serial console and KVM (keyboard, video, mouse) switches were the starting point, followed by these out-of-band controllers (iLO and IPMI).

Move the clock forward to today, and KVM, IPMI and iLO remain useful technologies, and they are still critical for specific devices that core business operations depend on. They matter mostly when a server is NOT running any operating system, or when the server has halted and is no longer 'on the net'. At nearly all other times, when the operating system IS running and the server is reachable on the network, server makers supply standard drivers that expose the motherboard's sensors and other hardware features to the operating system, and administrators get in-band remote access through technologies such as SSH and RDP.
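As an illustration of in-band sensor access, here is a minimal sketch that reads motherboard temperatures through the operating system rather than through a BMC. It assumes a Linux host and the kernel's hwmon sysfs layout (each `/sys/class/hwmon/hwmonN` directory holding a `name` file and `tempN_input` files in millidegrees Celsius, optionally paired with `tempN_label`); on some older kernels these files sit one level deeper under `device/`, which this sketch does not handle. The function name `read_hwmon_temperatures` is hypothetical, not part of any library.

```python
import glob
import os


def read_hwmon_temperatures(root="/sys/class/hwmon"):
    """Return {"<chip>/<label>": degrees_celsius} for each readable temperature input.

    Hypothetical helper. Assumes the Linux hwmon sysfs layout described above:
    tempN_input reports millidegrees Celsius, tempN_label is optional.
    """
    readings = {}
    for chip_dir in sorted(glob.glob(os.path.join(root, "hwmon*"))):
        # Chip driver name (e.g. "coretemp"); fall back to the directory name.
        try:
            with open(os.path.join(chip_dir, "name")) as f:
                chip = f.read().strip()
        except OSError:
            chip = os.path.basename(chip_dir)
        for input_path in sorted(glob.glob(os.path.join(chip_dir, "temp*_input"))):
            sensor = os.path.basename(input_path)[: -len("_input")]  # e.g. "temp1"
            # Use the human-readable label when the driver provides one.
            try:
                with open(os.path.join(chip_dir, sensor + "_label")) as f:
                    label = f.read().strip()
            except OSError:
                label = sensor
            try:
                with open(input_path) as f:
                    millidegrees = int(f.read().strip())
            except (OSError, ValueError):
                continue  # sensor unreadable or not reporting; skip it
            readings[f"{chip}/{label}"] = millidegrees / 1000.0
    return readings


if __name__ == "__main__":
    for sensor, celsius in read_hwmon_temperatures().items():
        print(f"{sensor}: {celsius:.1f} °C")
```

The out-of-band counterpart would ask the BMC for the same kind of readings over the network, for example with `ipmitool -I lanplus -H <bmc-address> -U <user> sdr list`, where the address and user are placeholders. The in-band path needs nothing beyond a running OS and its drivers, which is why it covers the common case.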

Today, it makes very little difference whether a monitoring system uses operating system calls or out-of-band access tools. The same sensor and status information is available through both, so the choice depends more on how the servers are physically deployed and connected. Remember, a huge percentage of out-of-band ports remain unconnected on the back of production servers. Many customers consider the second (OOB) network connection to be costly and redundant in all but the worst, most extreme failure conditions. (BUT it is critically important for certain types of equipment, such as in-house DNS servers or a SAN storage director.)