I am routinely surprised at how difficult it can be to determine the total energy consumption of many data centers. Operators of stand-alone data centers can at least look at the monthly bill from the utility, but as the Green Grid points out when discussing PUE metrics, continuous monitoring is preferred whenever possible. Measurement in an environment where resources, such as chilled water, are shared with non-data-center facilities can be even more complex. I’ll discuss that topic in the coming weeks. For now, I want to look just at the stand-alone data center.

In general, the choices are pretty simple for a green-field installation. The only real requirement is commitment to buying the instrumentation. Solid-core current transformers (CTs) are cheaper than split-core models, and generally smaller for the same current range. Wiring in the voltage is easy. Retrofits are more interesting. Nobody likes to work on a hot electrical system, but shutting down a main power feed is a risky process, even with redundant systems.

One logical metering point is the output of the main automatic transfer switches (ATS). Many folks assume they already have power metering on their ATS. It has an LCD panel showing various electrical readings, after all. Unfortunately, more often than not, only voltage is measured. That’s all the switch needs to do its job. It seems the advanced metering option is either overlooked or the first thing to go when trimming the budget.

Retrofitting the advanced option into an ATS is not trivial. Clamping on a few CTs might not seem tough, but the metering module itself generally has to be completely swapped out. Full shut-down time.

A separate revenue-grade power meter is not terribly expensive these days. In some cases it may even be competitive with the advanced metering option from your ATS manufacturer. Meters that include power-quality metrics such as total harmonic distortion (THD) can be found for less than $3K, CTs included. Such a meter could be installed directly on the output of the ATS, but the input of the main distribution panel is generally a better option.

Clamping on the CTs is relatively straightforward, even on a live system, though it can be tricky if the cabling is wired too tightly. Slim, flexible Rogowski coils are an excellent option in this case. A bit pricier, but ease of installation can make back the difference in labor pretty quickly.

For voltage sensing, distribution panels often have spare output terminals available. This is ideal in a retrofit situation, and desirable even in a new install. Odds are the breaker rating is higher than the meter could handle, so don’t forget to include protection fusing. If no spare circuit is available, you can perhaps find one that is at least non-critical, such as a lighting circuit, and could be shut down long enough to tie in the voltage.

Worst-case retrofit scenario, you have no local voltage connections available. CTs alone are better than nothing. A good monitoring system can combine those readings with nominal voltages, or voltages from the ATS, to provide at least apparent power. Most meters can be powered from a single-phase voltage supply, even 110V wall power. I recommend springing for the full power meter even in this case. At some point you’ll likely have some down time, hopefully scheduled, on this circuit, and you can perform the full proper wiring at that time.
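As a rough illustration of combining CT readings with a nominal voltage, the sketch below estimates three-phase apparent power. The function name, the 480 V line-to-line nominal, and the sample currents are assumptions for illustration, not values from any particular meter or site. Because the voltage is assumed rather than measured, and there is no phase-angle information, the result is apparent power (kVA) only.

```python
import math


def apparent_power_kva(phase_currents_a, nominal_line_to_line_v=480.0):
    """Estimate total apparent power (kVA) from per-phase CT currents.

    Assumption: voltages are balanced at the nominal value, so each
    line-to-neutral voltage is V_LL / sqrt(3). The 480 V default is a
    placeholder; substitute the nominal voltage of your own service.
    """
    v_ln = nominal_line_to_line_v / math.sqrt(3)
    # Sum V * I per phase, then convert VA to kVA.
    return sum(v_ln * amps for amps in phase_currents_a) / 1000.0


# Hypothetical CT readings in amps for phases A, B, C.
currents = [412.0, 398.0, 405.0]
print(f"Estimated apparent power: {apparent_power_kva(currents):.1f} kVA")
```

If the monitoring system can read live voltages from the ATS, passing those in place of the nominal value should tighten the estimate, but it still cannot yield real power or power factor.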

The final decision about your meter is whether to get the display. If your goal is continuous measurement (i.e., monitoring), the meter should be communicating with a monitoring system. The LED or LCD display will at best give you a secondary check on the readings. The option also complicates the installation, because you need some kind of panel mounting to hold it and make it visible. It can become more of a space issue than one might expect for a 25-square-inch display. Avoiding the full display output saves on the cost of the meter, and saves even more on the installation labor.

Look for a meter with simple LEDs or some other indicator to help identify wiring problems like mismatched current and voltage phases. If the meter is a transducer only, have the monitoring system up and running, and communication wiring run, before installing the meter, so you can use its readings to troubleshoot the wiring. Nobody wants to open that panel twice!
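When the monitoring system is the troubleshooting tool, a simple sanity check on the per-phase readings can help. A reversed CT typically shows up as negative real power on that phase, and a CT paired with the wrong phase voltage shifts the apparent phase angle by about 120 degrees, which produces negative power or an implausibly low power factor. The sketch below is one way to flag such phases. The function name, the input dictionaries, and the 0.5 power-factor threshold are all assumptions for illustration, and the check presumes a load that should be consuming power at a reasonably high power factor.

```python
def flag_wiring_suspects(per_phase_kw, per_phase_kva, min_pf=0.5):
    """Return {phase: reason} for phases whose readings suggest a wiring problem.

    per_phase_kw  -- real power per phase, e.g. {"A": 30.1, "B": 29.4, "C": 30.8}
    per_phase_kva -- apparent power per phase, same keys
    min_pf        -- hypothetical threshold below which the power factor
                     is treated as suspicious for this load
    """
    suspects = {}
    for phase, kw in per_phase_kw.items():
        kva = per_phase_kva[phase]
        if kva <= 0:
            # No load on this phase, so nothing can be concluded.
            continue
        if kw < 0:
            suspects[phase] = "negative real power: CT may be reversed or on the wrong phase"
        elif kw / kva < min_pf:
            suspects[phase] = "low power factor: current and voltage phases may be mismatched"
    return suspects


# Hypothetical readings: phase B reads negative, phase C has a poor power factor.
readings_kw = {"A": 30.0, "B": -14.0, "C": 9.0}
readings_kva = {"A": 31.0, "B": 30.0, "C": 30.0}
for phase, reason in flag_wiring_suspects(readings_kw, readings_kva).items():
    print(phase, reason)
```

A flag here is only a prompt to check the wiring while the panel is still open, not a diagnosis; a facility that legitimately exports power or runs at low power factor would need different thresholds.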

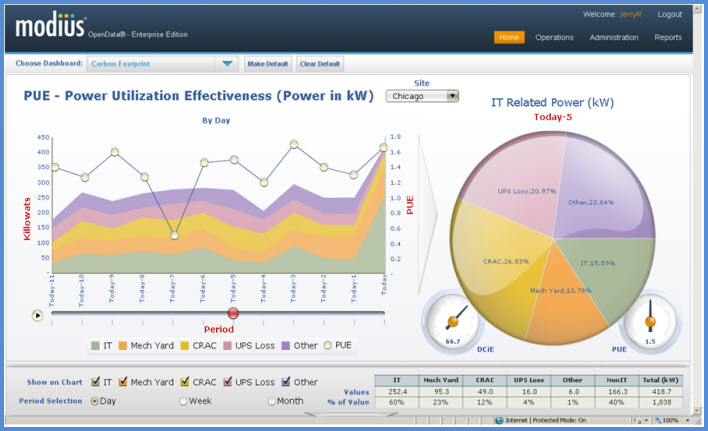

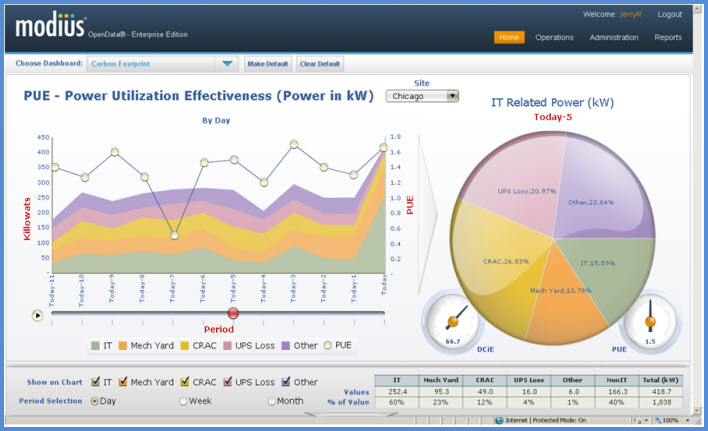

Continuous monitoring of total power is critical to managing the capacity and efficiency of a data center. Whether your concern is PUE, carbon footprint, or simply reducing the energy bill, the monthly report from the utility won’t always provide the information you need to identify specific opportunities for improvement. Even smart meters might not be granular enough to identify short-term surges, and won’t allow you to correlate the data with that from other equipment in your facility. It’s hard to justify skimping on one or two meters for a new data center. Even in a retrofit situation, consider a dedicated meter as an early step in your efficiency efforts.
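To make the contrast with a monthly bill concrete, here is a minimal sketch of what interval data allows: integrating power samples into energy, computing PUE as total facility energy divided by IT equipment energy, and spotting a short-term peak. The function names, the 15-minute interval, and the sample values are assumptions for illustration, and the IT load readings are presumed to come from separate metering not covered in this post.

```python
def energy_kwh(samples_kw, interval_s):
    """Integrate evenly spaced power samples (kW) into energy (kWh)."""
    return sum(samples_kw) * interval_s / 3600.0


def pue(total_kw_samples, it_kw_samples, interval_s):
    """PUE = total facility energy / IT equipment energy over the same period."""
    it_energy = energy_kwh(it_kw_samples, interval_s)
    if it_energy <= 0:
        raise ValueError("IT energy must be positive to compute PUE")
    return energy_kwh(total_kw_samples, interval_s) / it_energy


# Hypothetical 15-minute samples covering one hour.
total_kw = [500.0, 520.0, 610.0, 505.0]  # main meter
it_kw = [300.0, 300.0, 310.0, 300.0]     # IT load, from separate metering
interval_s = 900

print(f"Total energy: {energy_kwh(total_kw, interval_s):.2f} kWh")
print(f"PUE over the hour: {pue(total_kw, it_kw, interval_s):.2f}")
print(f"Peak total demand: {max(total_kw):.0f} kW")
```

The peak in the third interval would disappear into a monthly total, which is the kind of detail continuous monitoring is meant to surface.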

Modius OpenData has recently reached an intriguing milestone. Over half of our customers are currently running the OpenData® Enterprise Edition server software on virtual machines (VMs). Most new installations are starting out virtualized, and a number of existing customers have successfully migrated from a physical server to a virtual one.

Many enterprise IT departments are moving to a virtualized environment internally. In many cases, it has been made very difficult for a department to purchase new physical hardware. The internal “cloud” infrastructure allows for more efficient usage of resources such as memory, CPU cycles, and storage. Ultimately, this translates to more efficient use of electrical power and better capacity management. These same goals are a big part of OpenData’s fundamental purpose, so it only makes sense that the software would play well with a virtualized IT infrastructure.