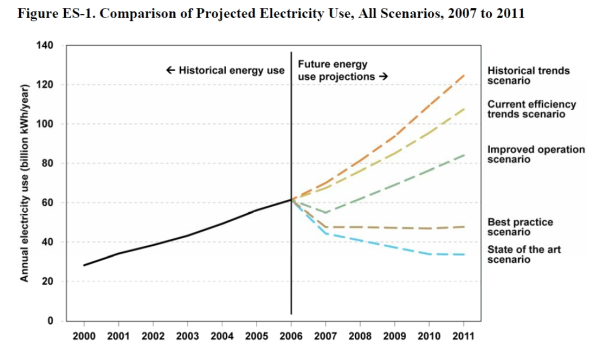

OK, we have been hearing about the 'greening' of the world around us, the price of power, the costs of cooling, the need for energy efficiency and, ultimately, The Green Grid's "PUE" KPI for a few years now. What originally sounded like a great way to definitively calculate the energy efficiency of getting IT work done still seems like a great way to do so, but it also seems like just the START of the journey...

Remember that a lot of work went into the creation of PUE, and many consider it a great place to start TODAY toward the goal of optimizing energy usage. After all, you can't optimize what you don't understand. PUE may not be viewed down the road as the single best metric, but for now it is MUCH better than what we had just a few years ago: nothing. PUE (Power Usage Effectiveness, the ratio of total facility power to the power delivered to IT equipment) is well understood and can be determined by ANY END-USER that chooses to calculate it. It can even be calculated in real time with a fairly small investment of time and resources.
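To make the "fairly small investment" concrete, here is a minimal sketch of the PUE arithmetic, assuming you already have meter readings for total facility power and IT equipment power in kW. The function names `pue` and `period_pue` and the sample readings are hypothetical, for illustration only.

```python
from typing import Iterable, Tuple


def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Instantaneous PUE: total facility power divided by IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    if total_facility_kw < it_equipment_kw:
        # Facility power includes the IT load, so it cannot be smaller.
        raise ValueError("total facility power cannot be less than IT power")
    return total_facility_kw / it_equipment_kw


def period_pue(samples: Iterable[Tuple[float, float]]) -> float:
    """PUE over a period from equally spaced (facility_kw, it_kw) samples.

    Assumes equal sampling intervals, so summing power samples is
    proportional to energy (kWh). This yields an energy-weighted PUE
    rather than an average of instantaneous ratios.
    """
    total_facility = 0.0
    total_it = 0.0
    for facility_kw, it_kw in samples:
        total_facility += facility_kw
        total_it += it_kw
    return pue(total_facility, total_it)


# Hypothetical meter readings (facility kW, IT kW), for illustration only.
readings = [(1800.0, 1000.0), (1750.0, 1000.0), (1900.0, 1050.0)]
print(f"latest PUE: {pue(*readings[-1]):.2f}")
print(f"period PUE: {period_pue(readings):.2f}")
```

Weighting by energy over a period, rather than averaging spot ratios, keeps a few low-load readings from skewing the number.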

Today the EPA took the next step, allowing end-users to compare their energy conservation and efficiency efforts to those of their peers. Basically, any company that wishes to can audit its PUE, document its findings, hire a PROFESSIONAL (a recognized audit partner) to verify its claims, and then submit the results to the EPA. Data centers that rank in the top 25% of their peer group will be recognized as 'Energy Star' compliant (along with the bragging rights that go with the star).
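The "top 25% of the peer group" idea can be illustrated with a simplified check. This sketch assumes lower PUE is better and that a peer group is just a list of PUE values; the function name `in_top_quartile` and the peer numbers are hypothetical, and the EPA's actual scoring methodology may well differ.

```python
from typing import Sequence


def in_top_quartile(my_pue: float, peer_pues: Sequence[float]) -> bool:
    """True if my_pue beats at least 75% of peers (lower PUE is better).

    Simplified illustration only; not the EPA's actual scoring model.
    """
    if not peer_pues:
        raise ValueError("need at least one peer PUE to compare against")
    # Count peers that are strictly less efficient (higher PUE) than us.
    worse = sum(1 for p in peer_pues if p > my_pue)
    return worse / len(peer_pues) >= 0.75


# Hypothetical peer-group PUE values, for illustration only.
peers = [1.5, 1.8, 1.9, 2.0, 2.1, 2.2, 2.5, 3.0]
print(in_top_quartile(1.6, peers))  # True: beats 7 of 8 peers
print(in_top_quartile(2.2, peers))  # False: beats only 2 of 8 peers
```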

So what does this mean to the industry? Well, I think we'll hear a lot of companies applaud the EPA's move toward Energy Star data center recognition. Many companies have worked hard to eliminate energy inefficiencies and love telling the world about their successes. The new Energy Star rating will make this message even louder, since it will provide some apples-to-apples comparison. It also supports the ROI measurements for these efforts. Peers will get a sense of what is POSSIBLE from others running similar environments. Some CIOs and CFOs will stand up and ask, "Why is my closest competitor X% more energy efficient making the same type of widget?"

We will also see a bunch of complaining about the use of PUE as the main KPI for determining Energy Star status. The more vocal opponents will argue that PUE is flawed from the start, or meaningless, and that it can be manipulated or contrived by the unscrupulous. In turn, we'll see these naysayers push again for "DCeP" (or one of the 10 proposed proxies) as a better KPI. I say it's good to see more energy going into KPIs like DCeP, but we need some forcing function NOW! Remember, the goal is to get companies to ACT NOW... mid-course corrections welcome!

I think PUE was a great first step. I think Energy Star for Servers, and then Energy Star for Data Centers, make a great SECOND step (or steps), but why would we be naive enough to think all of this would stop there?

Energy Star for Data Centers is circa 2010. Perhaps the folks at the EPA will have an Energy-Star-PLUS recognition in 2012 (they could call it "Energy Star for Data Centers 2012" or something similar) based on whatever proxy for DCeP is eventually agreed upon. Or perhaps they would use a different metric/KPI? Not sure. But what I am sure of is that we need to force ENERGY EFFICIENCY PROGRESS NOW. We need companies to stand up, articulate their best practices, and be tested and challenged by their constituents. We all need to LISTEN and LEARN from each other.

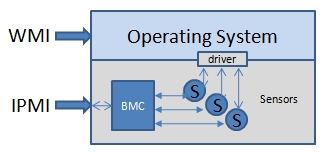

The status quo will no longer work. As an industry, we need to push the design and re-architecture of existing space toward high efficiency. There was too much waste in the past, and nobody really understood it. We need to do the hard work: build containment aisles or modify airflow based on inlet temperature or overall pressure, install sensors and monitoring, install spot cooling, refresh older server hardware, etc., etc., etc.

The energy efficiency work has just started, and there's a very long road ahead. Let's stay on track and work toward a common goal: doing more with less, making every kilowatt count, and reducing the cost of doing business. Remember, we are all on the same planet, using the same resources.

The EPA's "Energy Star for Data Centers" 2010 is a GOOD thing...