Recently someone asked me, "What are the consequences of not adopting a DCIM solution for my data center? Does it really matter?" Good question; here's my perspective:

In its 2011 Data Center Industry Census, Datacenter Dynamics reported that data center operators still cite energy costs and availability as their top concerns. This insight--coupled with the fact that enterprises will continually compete to grow market share with more web services, more apps, and more reach (e.g., emerging markets)--indicates that data center operators need to run their data centers much more efficiently than ever to keep up with escalating business demands.

Therefore, the disadvantages of not using a DCIM solution become evident when enterprises can't compete because they are taxed by data center inefficiencies that curb how quickly and adeptly a business can grow; for example:

• Unreliable web services that frustrate customers

• Limited or late-to-market apps that hinder the workforce

• Unpredictable data center operating costs that squeeze profitability

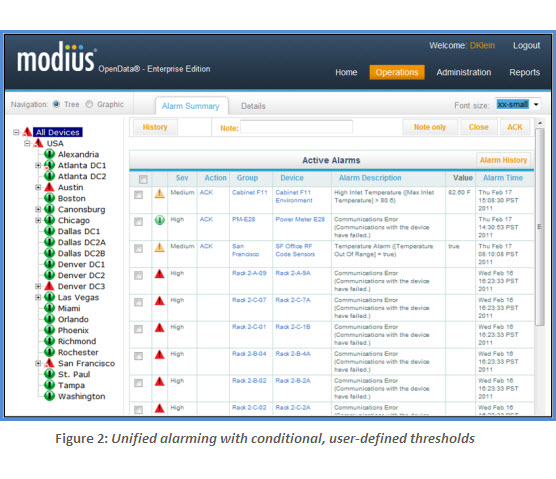

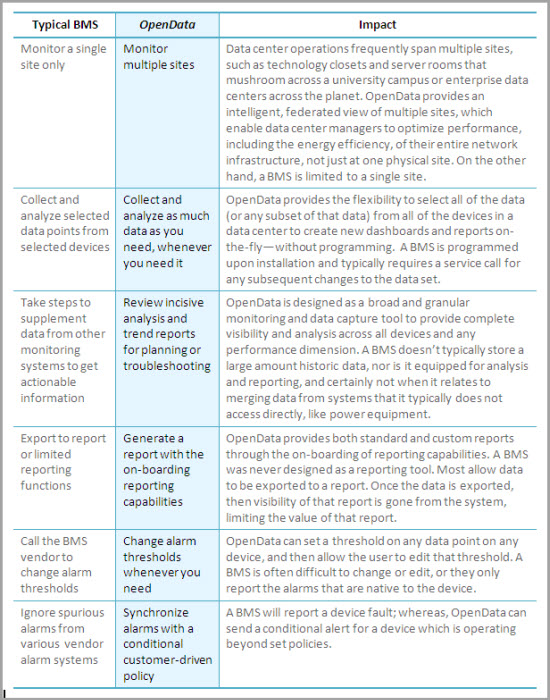

This is where OpenData software by Modius can help. OpenData provides both visibility into data center infrastructure and real-time decision support for managing it, so you can better manage availability and energy consumption. OpenData helps data center operators optimize the performance of their critical infrastructure, specifically the entire power and cooling chain from the grid to the server. For instance, with OpenData, you can establish a power usage baseline against which later measurements can be compared to determine the effectiveness of optimization strategies. OpenData also provides a multi-site view, from a single pane of glass, so you can manage critical infrastructure performance as an ecosystem rather than as isolated islands of equipment. And, because OpenData monitors granular data for the entire power and cooling chain, you can validate--or invalidate--in near real-time whether day-to-day tactical measures to improve data center performance are actually working.
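To make the baseline idea concrete, here is a minimal sketch of the arithmetic involved. It is not OpenData code and does not use any OpenData API; the function name `percent_change` and the sample kW readings are hypothetical, chosen only to illustrate comparing average power draw before and after an optimization.

```python
from statistics import mean


def percent_change(baseline_kw, current_kw):
    """Return the percent change in mean power draw relative to the baseline."""
    base = mean(baseline_kw)
    return (mean(current_kw) - base) / base * 100.0


# Hypothetical hourly power readings in kW (illustrative values, not real data)
baseline_kw = [100.0, 110.0, 90.0, 100.0]  # before the optimization
after_kw = [90.0, 95.0, 85.0, 90.0]        # after the optimization

change = percent_change(baseline_kw, after_kw)
print(f"Mean power draw changed by {change:.1f}% versus baseline")
```

A negative result means the site is drawing less power than it did during the baseline period. In practice you would want to compare like with like, such as similar IT load and outdoor conditions, before crediting the difference to a particular change.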

Modius OpenData has recently reached an intriguing milestone. Over half of our customers are currently running the OpenData® Enterprise Edition server software on virtual machines (VMs). Most new installations are starting out virtualized, and a number of existing customers have successfully migrated from a physical server to a virtual one.

Many enterprise IT departments are moving to a virtualized environment internally. In many cases, it has become very difficult for a department to purchase new physical hardware. The internal “cloud” infrastructure allows for more efficient usage of resources such as memory, CPU cycles, and storage. Ultimately, this translates to more efficient use of electrical power and better capacity management. These same goals are a big part of OpenData’s fundamental purpose, so it only makes sense that the software would play well with a virtualized IT infrastructure.