While I've seen my share of pristine new data centers over the past few years, as well as a huge number of large-scale retrofit projects where old facilities are being turned into usable new data center space, I have also seen an alarming number of older 'house of cards' data centers that are still in production today and appear to be 'hands-off'.

These data centers are typically chock full of older devices and interconnects that were passed down from generation to generation of IT managers, each of whom eventually realized that what they had inherited was unmanageable. While it is true that these data centers will ultimately go extinct in a world focused on operational efficiency, proactive management and best practices, we can all feel the pain involved when we encounter something like this.

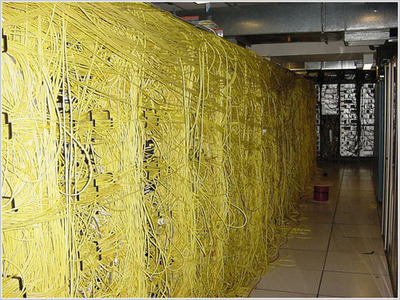

Above is one of the most interesting centers I've seen, and it would appear to have conflicting priorities as to what is required to move forward. While I don't have a comprehensive sequence of steps for migrating to a highly supportable, efficient and monitored data center, let me suggest one step that will help tremendously... Find the YELLOW patch cord and disconnect it.

Seriously, when I saw this photo I had to laugh and take a second look. Was it some new thermal blanketing technology? Or a way to eliminate blanking panels? The reason I make light here is that there are countless data centers with similarly out-of-spec designs that would benefit from adopting new data center technologies, including new power distribution, cooling and monitoring solutions, but are challenged by WHERE TO BEGIN and by the magnitude of the task at hand.

In the monitoring world, for instance, where Modius delivers value, we regularly find data centers with NO VISIBILITY into their energy usage, and we can easily identify hundreds or thousands of monitorable data points that would help get energy usage under control. We are ready, willing and able to take on chaos and make sense of it.